🧠 Concept

Ever seen a plane's propeller turn into a solid disc or warp on camera? Ever seen an LED panel show flickering horizontal lines on camera?

When multiple still images are shown in quick succession, the brain interprets the rapid changes as continuous motion. For example, the frame rate chosen for a movie or animation changes how smooth the movement looks.

The idea is to reveal a character or a pixel by flickering it at a different rate from the rest of the LEDs, so that the flickering is visible only through an optical tool such as a phone camera. Using a phone camera is a conceptual decision: it comments on the modern tendency to take a picture of everything (especially in a gallery, museum, or exhibition) before actually observing the object with one's own eyes. The digital medium (the camera) precedes the physical medium (the eye).

In short, my idea is to interpret the pipeline of an Ouija board.

1. The user asks the ghost a question by triggering an input (speaking, pressing a button)

2. The medium (the code, or the Ouija board) relays the answer from the source (ChatGPT, or the ghost)

3. The code sends each character from the answer to Arduino

4. The Arduino writes the signal to the LED of the corresponding character
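
Steps 3 and 4 could be sketched roughly like this on the computer side. This is only a minimal illustration, not the code I actually ran: the `BOARD` string and the function name `answer_to_led_indices` are hypothetical, and the real board layout may differ. It turns an answer into the sequence of LED indices that would be sent to the Arduino one by one.

```python
# Hypothetical board layout: the character at position i is lit by LED i.
# The characters chosen here are placeholders, not the real wiring.
BOARD = "ABCDEFGHIJKLMNOPQRST"


def answer_to_led_indices(answer, board=BOARD):
    """Map each character of the answer to an LED index.

    Characters that have no LED on the board (spaces, punctuation,
    missing letters) are skipped.
    """
    lookup = {ch: i for i, ch in enumerate(board)}
    return [lookup[ch] for ch in answer.upper() if ch in lookup]


if __name__ == "__main__":
    # Each index would be sent to the Arduino in order.
    for index in answer_to_led_indices("A bad idea"):
        print(index)
```

Sending plain indices rather than characters keeps the Arduino side simple, since each index can map directly to an output pin.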

Research

> Human Eye Temporal Resolution

Human eyes can't distinguish flashes that flicker faster than ~50 Hz (1/50 s per cycle). At that speed the ON/OFF pulses are too fast to perceive individually, so the light looks continuous. This is often described as persistence of vision.

Taking 60 Hz as an example, translated into Arduino milliseconds this is:

1000 ms (1 s) / 60 ≈ 16.67 ms per cycle (ON + OFF)
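
The same arithmetic as a small helper, in case other frequencies need trying. This is a sketch under my own assumptions: the function name `blink_timing_us` is made up, and I work in microseconds because Arduino's `delay()` only takes whole milliseconds, which cannot express half of a 16.67 ms cycle.

```python
def blink_timing_us(freq_hz, duty=0.5):
    """Return (on_us, off_us) for one ON/OFF cycle at the given frequency.

    duty is the fraction of the cycle the LED spends ON.
    Values are rounded to whole microseconds.
    """
    cycle_us = 1_000_000 / freq_hz
    on_us = round(cycle_us * duty)
    off_us = round(cycle_us) - on_us
    return on_us, off_us


if __name__ == "__main__":
    for hz in (50, 60):
        on_us, off_us = blink_timing_us(hz)
        print(f"{hz} Hz: ON {on_us} us, OFF {off_us} us")
```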

> Camera Temporal Resolution

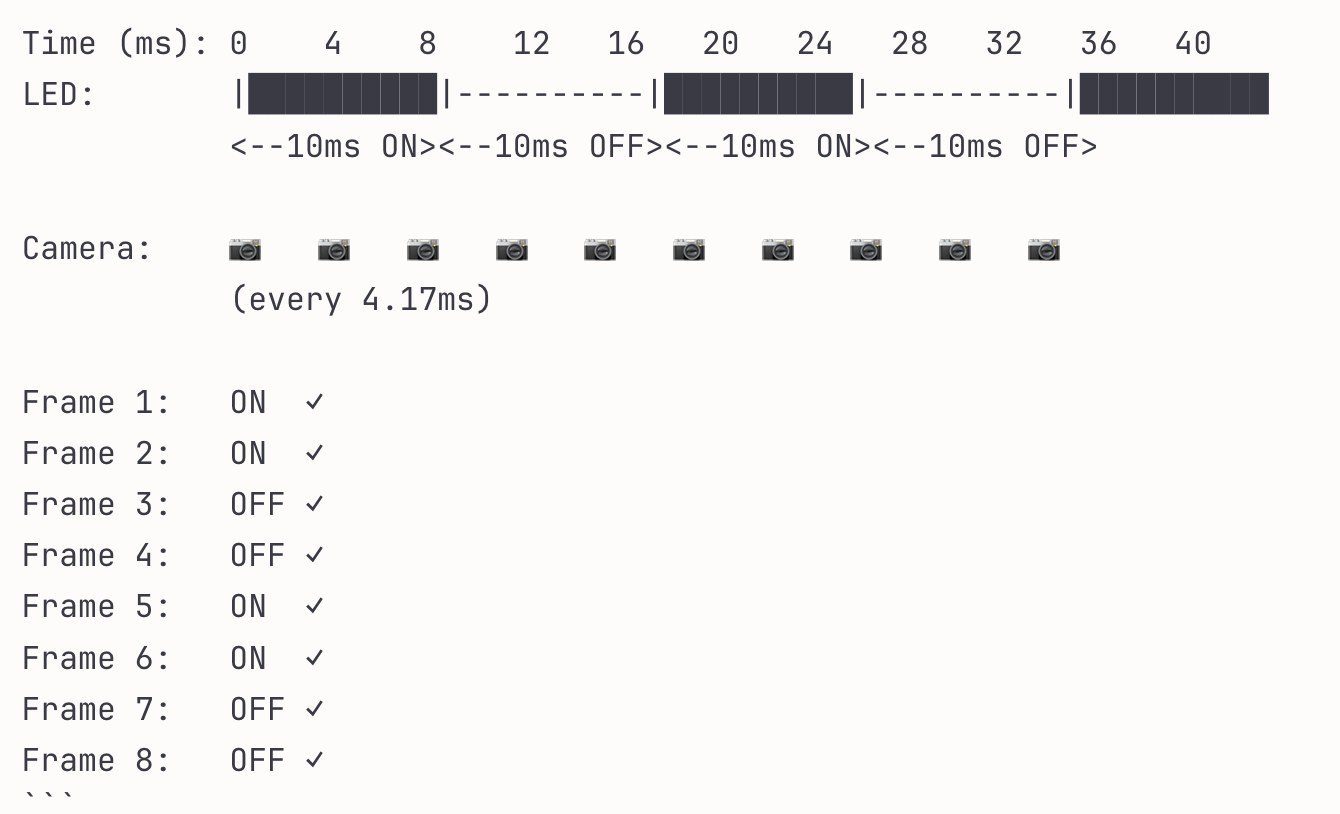

Frame Rate vs Exposure Time:

If we're shooting at 240 fps, there is one frame every 1000/240 ≈ 4.17 ms (the maximum possible exposure).

But each frame also has an exposure time (shutter speed), and during that exposure the camera sensor integrates all incoming light. This is the unknown part on a phone camera, because it is typically in auto mode. So let's assume a shutter speed of 1/1000 s, or 1 ms.

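Assuming that the LED switches state every 10 ms (10 ms ON, 10 ms OFF, so a 20 ms cycle), a 1 ms exposure fits easily inside the LOW period, so the camera will be able to pick it up.

But if we had coded the LED to be HIGH 95% of the time and LOW 5%, it would be LOW for only 1 ms per cycle, and the camera may not be able to pick it up because the LOW pulse is so short: an exposure would have to line up with it almost exactly.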
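
To get a feel for this, here is a rough simulation of how much light a single exposure collects. It is a simplified model under my assumptions (the LED starts each cycle ON, the sensor exposes the whole frame at once, and the 1 ms shutter speed is a guess), and the function names are made up for illustration.

```python
def on_time_until(t_ms, on_ms, off_ms):
    """Total time the LED has been ON between 0 and t_ms.

    Each cycle is on_ms of ON followed by off_ms of OFF.
    """
    cycles, remainder = divmod(t_ms, on_ms + off_ms)
    return cycles * on_ms + min(remainder, on_ms)


def exposure_brightness(start_ms, exposure_ms, on_ms, off_ms):
    """Fraction of the exposure window during which the LED was ON.

    1.0 means the frame sees the LED fully lit, 0.0 means fully dark.
    """
    end_ms = start_ms + exposure_ms
    lit_ms = on_time_until(end_ms, on_ms, off_ms) - on_time_until(start_ms, on_ms, off_ms)
    return lit_ms / exposure_ms


if __name__ == "__main__":
    frame_interval_ms = 1000 / 240  # 240 fps
    for label, on_ms, off_ms in (("50/50", 10, 10), ("95/5", 19, 1)):
        frames = [
            exposure_brightness(i * frame_interval_ms, 1.0, on_ms, off_ms)
            for i in range(24)
        ]
        print(label, [round(b, 2) for b in frames])
```

With the 50/50 pattern some frames come out fully dark, while with the 95/5 pattern a frame only darkens when the exposure happens to overlap the short LOW gap.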

The Nyquist Frequency

A signal can be reconstructed if it is sampled at a rate more than twice its highest frequency. The Nyquist frequency is half the sampling rate, and signal frequencies above it cause aliasing.

Application:

- LED: 20 ms per cycle, or 1000/20 = 50 Hz

- Camera: >100 fps (50 × 2)
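
The application above can be checked with a few lines. This is a sketch that treats the camera as an ideal sampler (it ignores exposure time and rolling shutter, which a real phone camera has), and the function names are my own. The second function estimates the flicker rate that would appear in the footage when the camera is too slow.

```python
def min_camera_fps(led_hz):
    """Frame rate the camera must exceed to capture the flicker without aliasing."""
    return 2 * led_hz


def apparent_flicker_hz(led_hz, fps):
    """Flicker frequency seen in the footage for an ideal sampling camera.

    Below the Nyquist frequency (fps / 2) the flicker is seen as-is;
    above it, the flicker folds back to a lower, aliased frequency.
    """
    return abs(led_hz - round(led_hz / fps) * fps)


if __name__ == "__main__":
    led_hz = 50
    print("camera must exceed", min_camera_fps(led_hz), "fps")
    for fps in (30, 60, 120, 240):
        print(fps, "fps ->", apparent_flicker_hz(led_hz, fps), "Hz in footage")
```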

🧱 Materials

- Paper, lots of paper

- 20 white LEDs for the letters

- 20 × 47 Ω resistors, one per letter LED

- 1 push button to trigger listening on the web app

- 1 red LED for listening indicator

- Arduino Nano

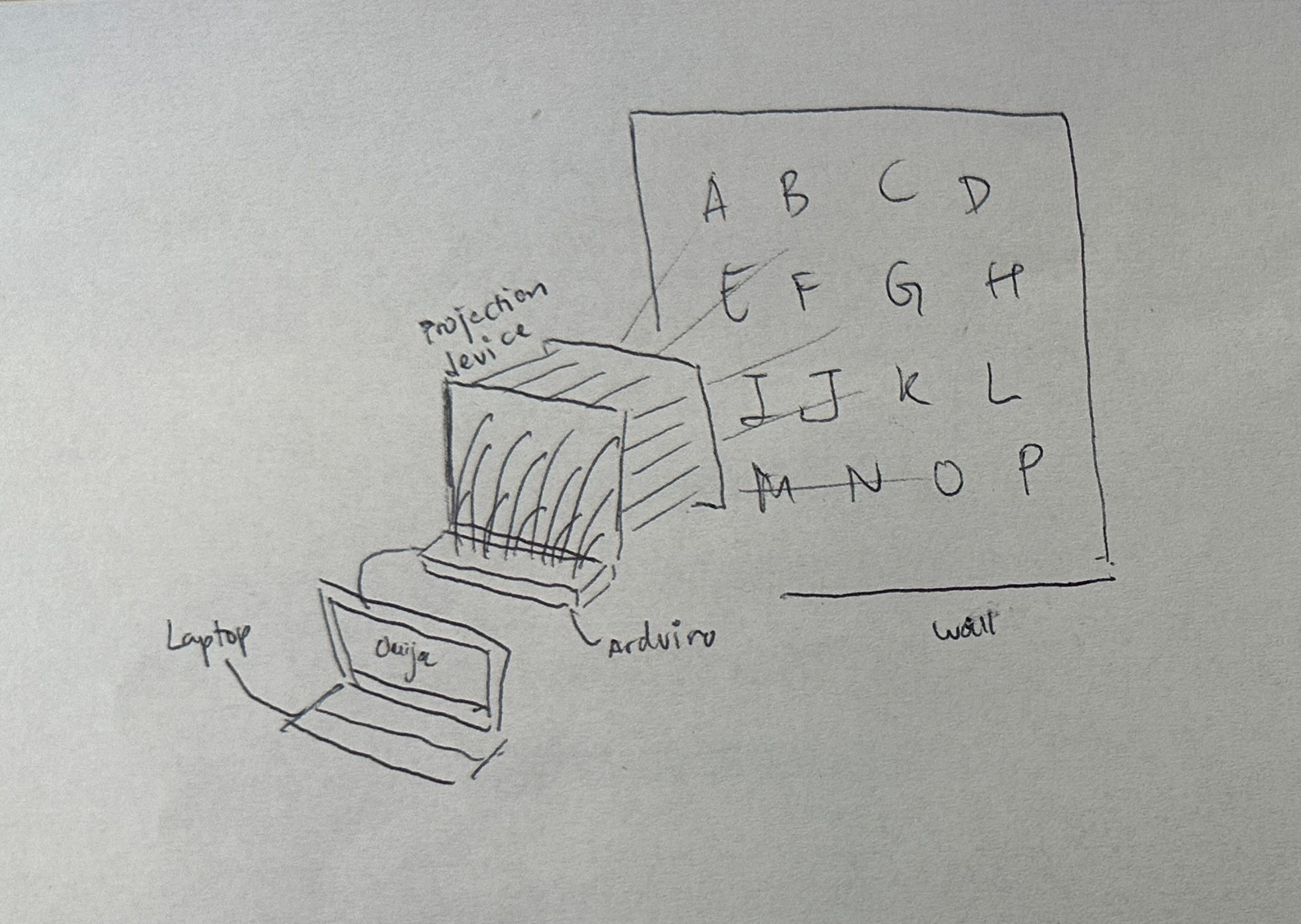

✏️ Sketch

1. Projecting the character on the wall

2. Covering the characters with a semi-opaque material as a projection surface

3. Use keycaps as projection surface

🛠️ Behind The Scenes

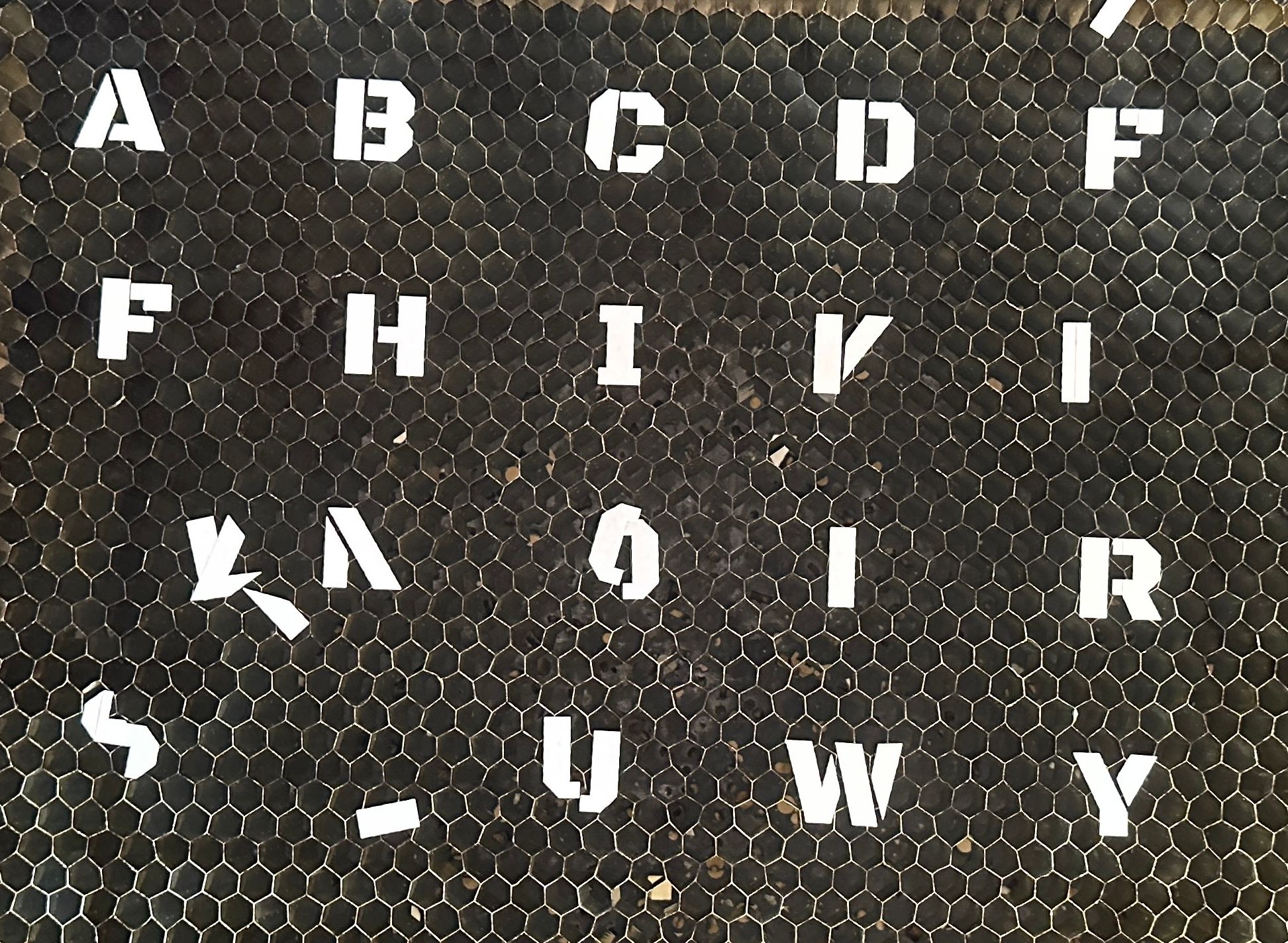

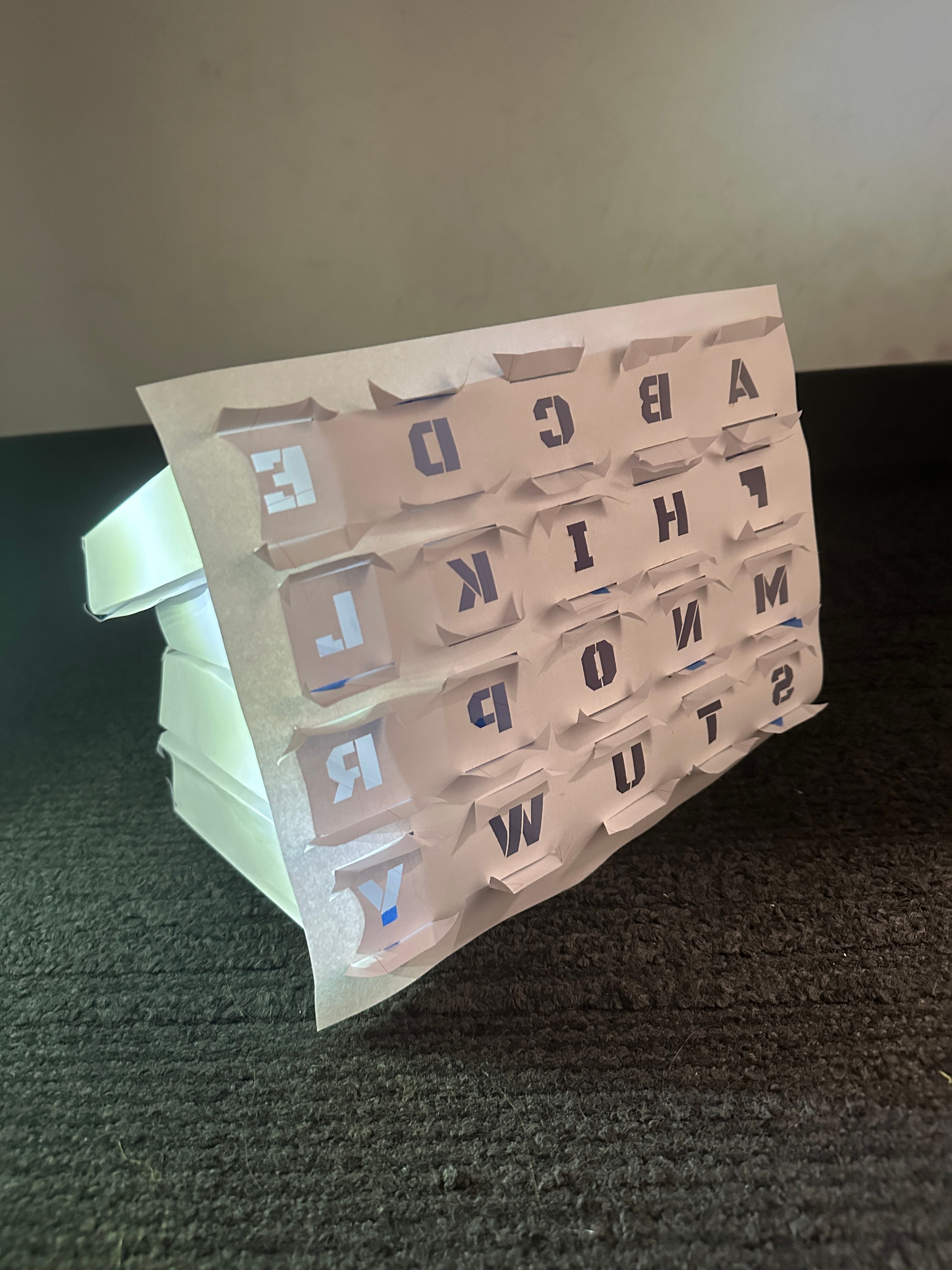

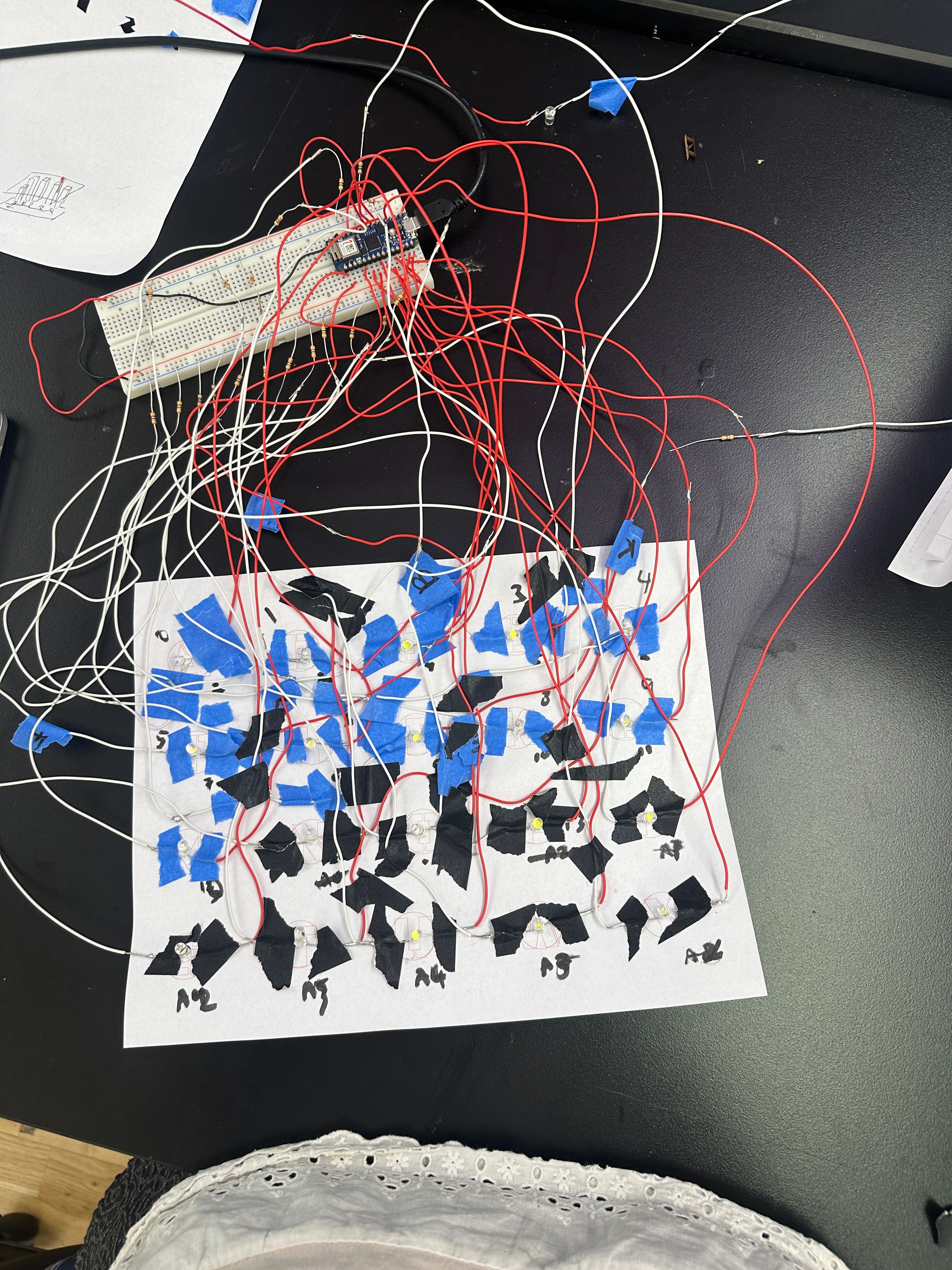

Laser-cutting the letters

Avoiding glue by slotting the paper pieces into each other to attach them

Problem encountered! The letters are clustered together. Abandoning this approach!

The wires catastrophe

Issues

Even when the flickering itself is too fast for the eye to see (above ~50 Hz), it still shows up as a dimming of brightness. This caveat defeats the initial goal of not revealing any information to the human eye, so I decided to simply show which character is currently selected. Another reason for this change is that showing it directly requires less user interaction and gives the user more immediate feedback.

However, I still strongly believe in the original persistence of vision idea and would like to pursue it. I do not think that asking users to pull out their camera adds an extra step; instead, it reinforces the significance of taking a picture and how it can reveal other information.

User Testing

A funny incident happened when the ghost addressed our Indian gay tester. The ghost said that its favorite color is India, that it is NEAR gay, that it is male, and that it comes from the Netherlands.

Elizabeth Kezia Widjaja © 2026 🙂