A documentation site for the continuous captures taken at the Amorphous Body party, commissioned for the Asian Avant-Garde Film Festival (2024) at M+ Museum, Hong Kong.

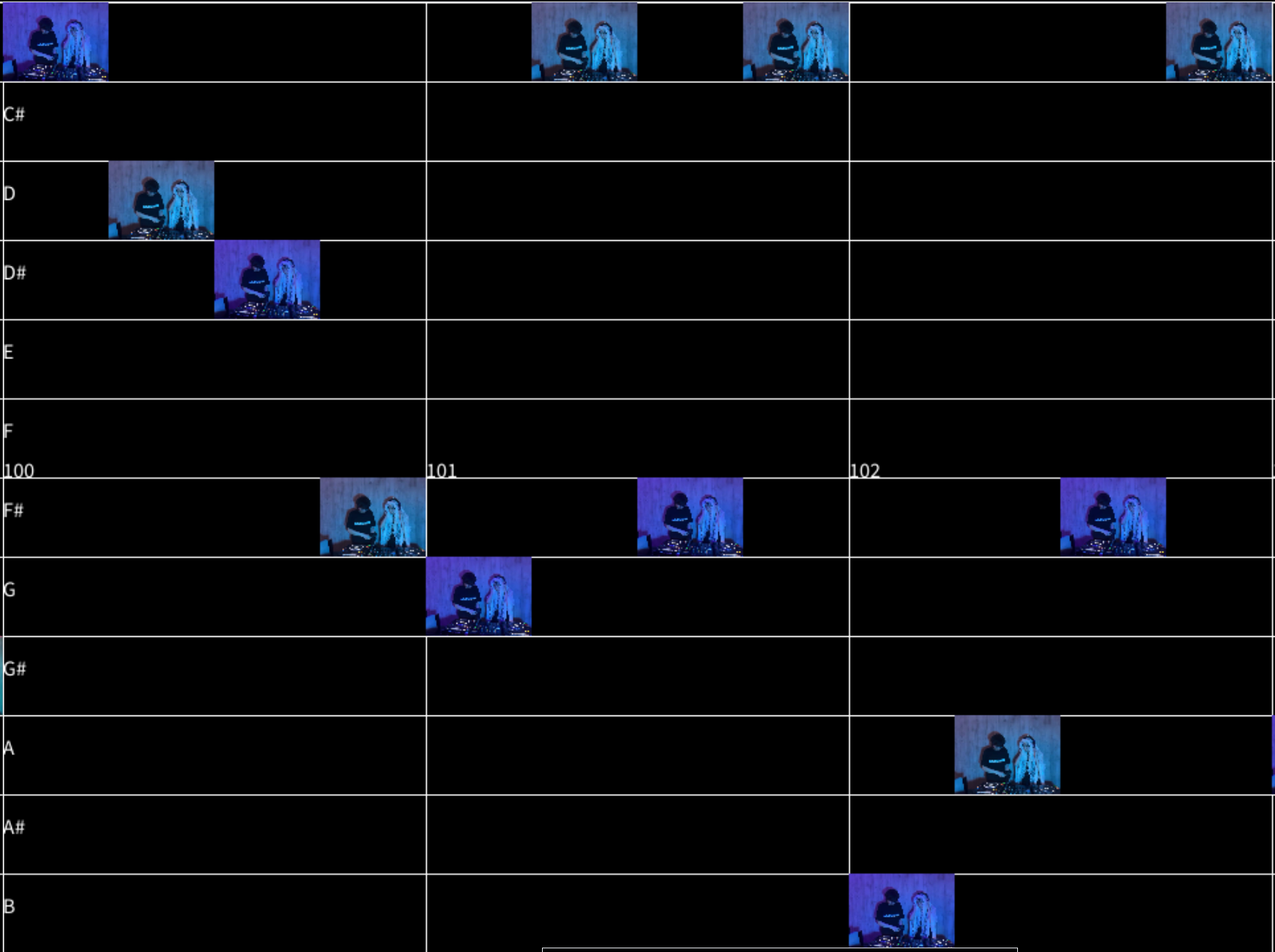

MIDI-Graph visualizes live sound input as a continuously evolving field of images, in which each camera-captured frame becomes a “note” on a pitch-amplitude grid. The system borrows the interface logic of the MIDI editors found in digital audio workstations, but instead of drawing plain rectangular notes, it replaces them with real-time video captures, turning sound into a visual memory of the moment it was heard.

Horizontal scroll through a very long capture spanning the full 3-hour event

⚙️ Technical Overview

Developed in Processing, the program integrates OSC (Open Sound Control) input and live video capture:

- Pitch Detection → y-axis: Incoming MIDI pitch values (from 36 to 83, a four-octave span) are mapped vertically, determining the y-position of each captured video frame.

- Amplitude → x-axis: The loudness of the signal (amplitude) drives the x-position, simulating the duration and placement of a MIDI note on a timeline.

- Image Generation: The Processing Video library continuously captures frames from a webcam. Each image is resized and composited within a dynamic MIDI grid, replacing conventional note rectangles with real, time-bound textures.

- OSC Integration: Pitch and amplitude data are streamed via OscP5 and NetP5, allowing external audio software or devices to control the system.

- Temporal Logic: The canvas is refreshed once the x-axis is filled, similar to how a sequencer loops over time — producing rhythmic, recursive layers of visualized sound.
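Because Processing sketches are written in a Java dialect, the grid logic described above can be approximated in plain Java. This is a minimal, hypothetical reconstruction, not the project's source: the canvas size (1920 × 1080), the maximum note width, the 0 to 1 amplitude scale, and every class and method name (`MidiGridSketch`, `pitchToY`, `addNote`, `Grid`, `Note`) are assumptions. It reimplements the formula behind Processing's `map()`, gives each of the 48 pitches from 36 to 83 its own row (higher pitch drawn higher), advances an x-cursor by a width proportional to amplitude, and interprets "refreshed" as clearing the grid and returning to the left edge once the next note would overflow the canvas. The real program may instead draw each new pass over the previous one.

```java
import java.util.ArrayList;
import java.util.List;

public class MidiGridSketch {
    // Pitch range stated in the documentation (MIDI notes 36 to 83, 48 semitones).
    static final int PITCH_MIN = 36;
    static final int PITCH_MAX = 83;

    // Assumed values, not taken from the original sketch.
    static final float CANVAS_W = 1920f;
    static final float CANVAS_H = 1080f;
    static final float MAX_NOTE_W = 160f; // note width at full amplitude (1.0)

    // Same linear formula as Processing's map(value, start1, stop1, start2, stop2).
    static float map(float v, float a1, float b1, float a2, float b2) {
        return a2 + (b2 - a2) * ((v - a1) / (b1 - a1));
    }

    // One row per semitone across the full canvas height.
    static float rowHeight() {
        return CANVAS_H / (PITCH_MAX - PITCH_MIN + 1);
    }

    // Higher pitches land nearer the top of the canvas (smaller y).
    static float pitchToY(int pitch) {
        int p = Math.max(PITCH_MIN, Math.min(PITCH_MAX, pitch)); // clamp to the stated range
        return map(p, PITCH_MIN, PITCH_MAX, CANVAS_H - rowHeight(), 0f);
    }

    // Screen rectangle that one captured frame would fill.
    static class Note {
        final float x, y, w, h;
        Note(float x, float y, float w, float h) {
            this.x = x; this.y = y; this.w = w; this.h = h;
        }
    }

    static class Grid {
        final List<Note> notes = new ArrayList<>();
        float cursorX = 0f; // next free x-position on the timeline
        int loops = 0;      // how many times the canvas has been refreshed

        Note addNote(int pitch, float amplitude) {
            float a = Math.max(0f, Math.min(1f, amplitude)); // assume amplitude in [0, 1]
            float w = a * MAX_NOTE_W;                         // louder note = wider frame
            if (cursorX + w > CANVAS_W) {
                // x-axis is full: clear the grid and loop back to the left edge
                notes.clear();
                cursorX = 0f;
                loops++;
            }
            Note n = new Note(cursorX, pitchToY(pitch), w, rowHeight());
            notes.add(n);
            cursorX += w;
            return n;
        }
    }

    public static void main(String[] args) {
        Grid grid = new Grid();
        // Simulated (pitch, amplitude) pairs standing in for incoming OSC messages.
        int[] pitches = {36, 60, 83};
        float[] amps = {1.0f, 0.5f, 0.25f};
        for (int i = 0; i < pitches.length; i++) {
            Note n = grid.addNote(pitches[i], amps[i]);
            System.out.printf("pitch %d -> x=%.1f y=%.1f w=%.1f%n", pitches[i], n.x, n.y, n.w);
        }
    }
}
```

In an actual Processing sketch, each `Note` rectangle would presumably be filled with the latest webcam frame via `image(frame, n.x, n.y, n.w, n.h)`; here the pitch and amplitude values are hard-coded rather than received over OSC.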

The project subverts the sterile abstraction of digital sound interfaces by reintroducing the body and the environment into the signal. Rather than treating MIDI notes as purely synthetic representations, the system records the real scene in which each note occurs, embedding human gestures, lighting, and atmosphere into the score.

Each sound becomes both audible and visible, situating musical data in lived space. The output is a generative collage in which sound, movement, and vision loop together: an indexical sequencer that plays time itself.

Captures of "Panic Library"

Special thanks to Aisha Causing, Michael, and Eunice Tsang

Elizabeth Kezia Widjaja © 2026 🙂